Breaking news

The latest results achieved by the project consortium.

Visuomotor spatial awareness through concurrent reach/gaze actions

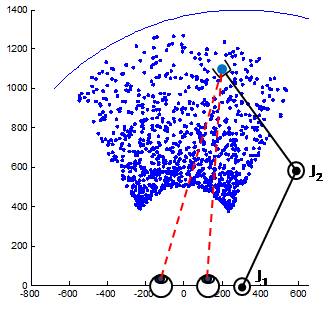

Figure 1: experimental setup used to test the robot's ability to reach and gaze concurrently, in order to generate an implicit representation of the peripersonal space by matching head-centered and arm-centered schemas.

Eye and arm movements often go together: we fixate an object before, or while, reaching towards it. Such a combination of looking at and reaching for the same target can be used to establish an integrated visuomotor representation that supports spatial awareness.

Coordinated control of eye and arm movements is employed to bring visual, oculomotor and body-centered spatial representations into register with each other. In this work, taking inspiration from primate visuomotor mechanisms, the eyes and arms of a humanoid robot are treated as separate effectors, each controlled through its own specific representation; these representations combine to form a single, shared visuomotor awareness.

Visual/oculomotor and body-centered representations are maintained through radial basis function (RBF) networks, chosen for their biological plausibility and their suitability for reference frame transformations. The former transforms ocular movements and stereoscopic visual information into a head-centered reference frame and, when needed, elicits the eye movements required to foveate a given visual target. The latter links arm movements to an effector-based representation of space, built through tactile exploration of the environment. Concurrent execution of gaze and reach movements brings the two representations into register, thus generating an implicit, integrated representation of the peripersonal space. Both basis function representations were designed taking inspiration from structures of the primate posterior parietal cortex, while also being adapted for integration within the whole model and, above all, for robotic implementation.

As a first implementation of the model, simulated experiments of coordinated reach/gaze actions were performed, in which tactile information was replaced by visual tracking of the effector. The two implemented RBF networks perform bidirectional transformations between stereo visual information and oculomotor (vergence/version) space, and between oculomotor and arm joint space (see figure).
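To give some intuition for the oculomotor (vergence/version) space, the following Python sketch computes, for an idealised planar head, the left and right eye rotations that fixate a target and converts them to version (mean eye angle) and vergence (difference between eye angles), then inverts the mapping by triangulation. It works from a head-centered target position rather than from the image-based stereo input used in the project, and it is only the analytic geometry a learned network would have to capture. The 6.5 cm interocular baseline, the sign conventions and the function names are assumptions for illustration.

```python
import math


def fixation_angles(x, z, baseline=0.065):
    """Version and vergence (rad) that foveate a target at (x, z) metres.

    Assumed idealised geometry: eyes at (-baseline/2, 0) and (+baseline/2, 0),
    z pointing straight ahead, angles measured from straight ahead and
    positive to the right.
    """
    left = math.atan2(x + baseline / 2, z)
    right = math.atan2(x - baseline / 2, z)
    version = (left + right) / 2
    vergence = left - right  # positive when the eyes converge
    return version, vergence


def target_from_angles(version, vergence, baseline=0.065):
    """Inverse mapping: triangulate the fixated point from version/vergence."""
    left = version + vergence / 2
    right = version - vergence / 2
    # Both gaze rays pass through the target: x = -b/2 + z*tan(left) = b/2 + z*tan(right)
    z = baseline / (math.tan(left) - math.tan(right))
    x = -baseline / 2 + z * math.tan(left)
    return x, z


version, vergence = fixation_angles(0.10, 0.40)
x, z = target_from_angles(version, vergence)
print(f"version={version:.4f} rad, vergence={vergence:.4f} rad -> ({x:.3f}, {z:.3f}) m")
```

Because vergence is always positive for targets in front of the eyes (z > 0), the inverse is well defined over that whole region, so the mapping can be queried in either direction.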

An integrated representation emerges from the simulated interaction of the agent with the environment. This implicit representation allows the peripersonal space to be represented simultaneously in terms of different visual and motor parameters. Importantly, thanks to the properties of RBF networks, the transformations are fully reversible, so that each exploratory action both accesses and modifies the representations.

Experiments of concurrent reaching and gazing generate an implicit representation of the peripersonal space by matching head-centered and arm-centered schemas. This representation remains implicit: far from being an actual map of the environment, it constitutes a skill of the robot for interacting with it. The schema above is now being implemented on a real humanoid torso (see Figure 1), where coordinated reach/gaze actions are used to integrate and match the sensorimotor maps. This learning process is the agent's normal behavior and the most fundamental component of its basic capability to interact with the world while simultaneously updating its representation of it.

Eris Chinellato, Marco Antonelli, Beata Joanna Grzyb, Angel P. del Pobil

Robotic Intelligence Laboratory

Department of Engineering and Computer Science

Universitat Jaume I de Castellon (UJI)

WEBMASTER: Agostino Gibaldi (UG)